Artificial Intelligence: Still a Long Way from Judgment Day

The 1991 sci-fi film Terminator 2 predicted that artificial intelligence (AI) software known as Skynet would become self-aware on August 29, 1997, and rapidly take over the world.

Today, as we celebrate 22 years and counting without such an extreme outcome, we continue to scan the field for new developments in AI. Read on, and listen to our podcast with GAO’s Chief Scientist and Managing Director, Tim Persons, to learn more about our work in this area.

The current state of AI

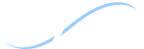

AI is far from developing into anything like Skynet. That would require AI technologies with broad reasoning abilities, sometimes called third-wave AI, which are highly unlikely to emerge in the foreseeable future.

Currently, the most advanced AI is still in its second wave, driven by what’s called machine learning, in which algorithms use massive datasets to infer rules about how something works with little to no human guidance. By comparison, first-wave AI followed rules written entirely by humans.
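To make the difference between the two waves concrete, here is a toy sketch built on a hypothetical transaction-screening task (the data, the $1,000 cutoff, and the function names are all invented for illustration and do not describe any real system). The first function is a first-wave rule a person wrote down; the second infers its own cutoff from labeled examples using a decision stump, one of the simplest machine learning methods.

```python
# Toy contrast between first-wave and second-wave AI.
# Hypothetical task and data: flag possibly fraudulent transactions.

def rule_based_flag(amount: float) -> bool:
    """First wave: a human chose the $1,000 threshold by hand."""
    return amount > 1000.0


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Second wave: infer the threshold from labeled data.

    Each example is (amount, was_fraud). Every observed amount is tried
    as a cutoff (flag if amount > cutoff), and the cutoff with the fewest
    misclassified examples is kept.
    """
    best_cutoff, best_errors = 0.0, len(examples) + 1
    for cutoff, _ in examples:
        # Count examples where the flag disagrees with the true label.
        errors = sum((amount > cutoff) != label for amount, label in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Hypothetical labeled history: (amount in dollars, was it fraud?)
training_data = [
    (20, False), (45, False), (300, False),
    (650, True), (900, True), (1500, True),
]

cutoff = learn_threshold(training_data)
print(cutoff)                               # 300
print(rule_based_flag(700), 700 > cutoff)   # False True
```

The learned cutoff depends entirely on the examples it is given, which is also why the quality of the data matters so much: if the labeled history were skewed, the inferred rule would be skewed too.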

AI uses in business and government

The overarching potential benefit of AI is to relieve people of routine tasks, which could help deliver better products and services at lower cost. So far, machine learning has figured out the rules for voice recognition, facial recognition, movie recommendation, medical scan analysis, and answering questions shouted into a smart speaker.

And our 2018 technology assessment on AI highlighted even greater implications for businesses and government in the near future. For example:

- In financial services, AI-driven chatbots and call centers could meet customer needs faster. And AI software could monitor transactions for fraud in real time.

- In cybersecurity, AI could help identify and fix vulnerabilities.

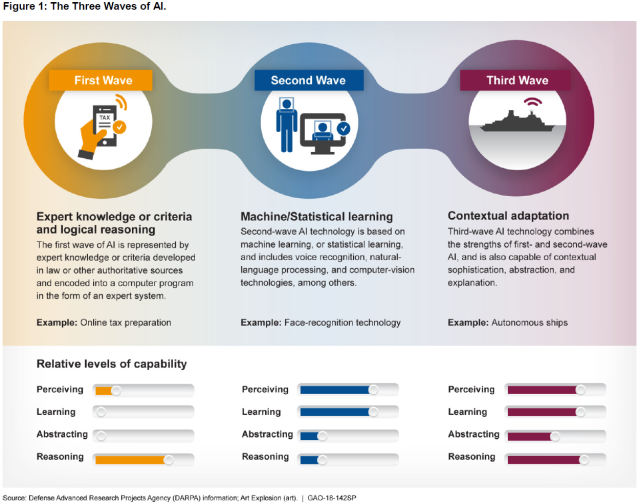

- In transportation, automated cars and trucks could improve highway safety, reduce delivery costs, and increase our mobility.

AI also has the potential to transform health care. In fact, we have a series of technology assessments underway in that sector, thanks to our expanded capacity to look toward future developments with the launch of our Science, Technology Assessment, and Analytics team earlier this year.

In addition, we recently reported on how insurance companies use AI and other technologies, and how those uses might be regulated, as well as how the Department of Labor could better track the effects of AI technologies on jobs.

Is AI too good to be true?

Even with the possible improvements that AI may bring, we’re keeping an eye on the associated risks, too. Such risks are often related to the large amount of data that machine learning generally requires. One risk is that when data about people are collected and aggregated, they could be used in ways that might not benefit them. For example, data from medical records might be used to deny at-risk individuals insurance or employment.

Another risk is that biases in the data could lead to biased outcomes. For example, AI is under development to assist in criminal sentencing, and it’s possible the resulting recommendations might be harsher for certain racial groups. Any such bias may be difficult to detect, however, since most machine learning algorithms are “black boxes,” meaning users cannot understand the reasons behind their decisions or recommendations.

Much like the end of Terminator 2 (spoiler alert!), in which the benevolent AI-driven robot played by Arnold Schwarzenegger defeats the evil AI robot, we have a similar, if less dramatic, vision: a future in which the nation takes advantage of this powerful technology while minimizing its risks.

- Comments on GAO’s WatchBlog? Contact blog@gao.gov.